Abstraction

Abstracting away problems is the engine of progress.

There's a popular activity in computer science 101 classes that involves no computers. It's simple to set up: The professor stands in front of the class with a jar of peanut butter, a jar of jam, a bag of bread, and a knife. Then she asks the students to tell her how to make a PB&J sandwich.

Inevitably, a student offers an instruction like "put the peanut butter on the bread," and watches in dismay as the professor picks up the entire jar of unopened peanut butter and places it on top of the bread — the same way a computer would if given only that command.

Computers are stupid.

The peanut butter exercise is effective because the real goal of any good introductory computer science course isn't to teach you how to program a computer. It's to teach you how to think like one, so that you can more effectively break down problems into steps for the computer to take.

Computers are literal machines and have to be told in excruciating detail exactly what to do:

"Pick up the peanut butter jar with your left hand. Using your other hand, twist the cap off the jar. Then pick up the knife and insert it into the top of the jar..."

Computers have gotten better over time because programmers and end users now have access to increasing levels of abstraction that make them easier to use. At their core, computers still operate in binary: millions of ones and zeros that drive their logic.

Telling a computer what to do with only ones and zeros is really hard, so we devise ways of typing instructions that are a little closer to natural language. Every programming language is a layer of abstraction on top of the underlying binary. For example, the simple command print('Hello, World!') in the language Python does roughly what you'd expect: It displays the text "Hello, World!"
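
To get a feel for how much that one line hides, here is a small sketch that prints the same text and then shows the ones and zeros of its ASCII encoding. The helper name to_binary is made up for this illustration, and the bits shown cover only the text itself, not the many machine instructions needed to display it.

```python
def to_binary(text):
    """Return the ASCII bytes of `text` as space-separated 8-bit strings."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


message = "Hello, World!"
print(message)             # the human-friendly layer
print(to_binary(message))  # the same characters, one group of 8 bits each
```

Thirteen characters become thirteen groups of eight bits, and that is before counting any of the work the computer does to put them on the screen.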

But here's where things get fun: Sets of these commands can be combined into packages and shared. So instead of having to painstakingly write the instructions for making a sandwich, you can just find someone who has already released their code for doing that and use theirs. Now you can just tell the computer, "Make me a sandwich."
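
As a toy sketch of what that packaging looks like, the function below bundles the excruciating steps behind one command. Every name here (open_jar, spread, make_sandwich) is hypothetical and invented for this illustration; it is not taken from any real package.

```python
def open_jar(jar):
    """One low-level step: describe opening a jar."""
    return f"twist the cap off the {jar} jar"


def spread(ingredient, slice_name):
    """Another low-level step: describe spreading an ingredient."""
    return f"use the knife to spread {ingredient} on the {slice_name} slice"


def make_sandwich():
    """Bundle the low-level steps behind a single high-level command."""
    return [
        open_jar("peanut butter"),
        spread("peanut butter", "first"),
        open_jar("jam"),
        spread("jam", "second"),
        "press the two slices together",
    ]


# The caller only needs the one command, not the details inside it.
for step in make_sandwich():
    print(step)
```

Whoever calls make_sandwich() never has to think about jars or knives again, which is the whole point.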

Abstracting away problems in this way is the engine of progress.

Enter the digital revolution: Entire companies are now built on assembling publicly available (open source) solutions to problems, adding some of their own proprietary code, and releasing additional layers of abstraction into the world.

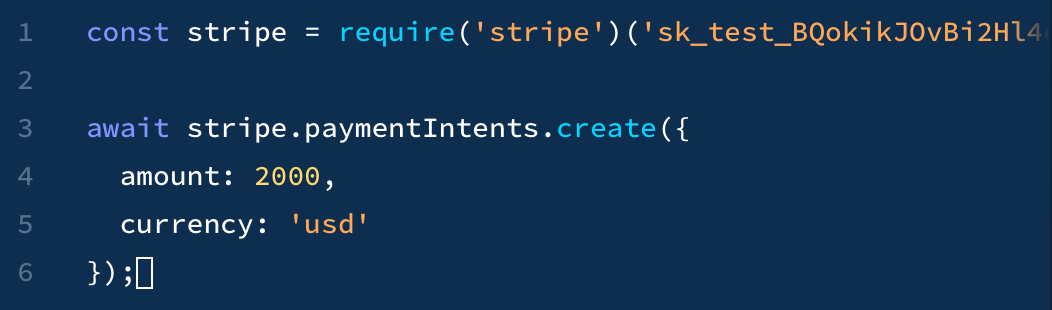

The payment processing company Stripe, for example, makes it famously easy to add payments to your app or service. Now, instead of rolling your own payment processing, you can just type something like this:
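
The exact snippet depends on the provider's library. As a rough sketch, using a made-up PaymentsClient class that stands in for a real payments library (it is not Stripe's actual API, and the key and card token are placeholders), the call might look like this:

```python
from dataclasses import dataclass


@dataclass
class Receipt:
    amount_usd: int
    description: str
    status: str


class PaymentsClient:
    """Hypothetical stand-in for a payment provider's library."""

    def __init__(self, api_key):
        self.api_key = api_key

    def charge(self, amount_usd, card_token, description):
        # A real provider would talk to card networks and banks here.
        # This sketch simply pretends the charge went through.
        return Receipt(amount_usd, description, "succeeded")


client = PaymentsClient(api_key="sk_test_placeholder")
receipt = client.charge(
    amount_usd=2000,
    card_token="tok_placeholder",
    description="Example charge",
)
print(receipt.status)
```

Everything hard about moving money lives behind the charge call; the caller supplies only an amount, a card, and a description.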

If you're not a programmer, your eyes might glaze over when you first look at the above code. But if you take a moment to try to read it, you can probably guess what it does: Tell Stripe to charge $2,000.

This process of abstracting away problems also applies to the analog world.

Nobody in the world knows every step of manufacturing a pencil from scratch. You'd have to know how to chop the wood, mine the graphite and aluminum, harvest the rubber, and cut, melt, and assemble it all into the final product. And you'd also need to know how to make all the tools for doing that!

If you were starting a pencil-making company today, you'd just find suppliers who have already figured out the process of extracting the natural resources. Those suppliers have effectively created a layer of abstraction, just like Stripe has. Instead of going out and mining your own graphite, you'd just call up Graphite Mining Inc. and ask them to send you 100 boxes.

One of the ultimate measures of progress is the number of problems we've effectively gotten rid of by abstracting them away.

In "New Technology" I wrote about how this applies to us personally: "When the solutions to our problems become so reliable they recede into the background of our lives, civilization takes a step forward."

So when you're faced with a new problem, ask yourself: Who has already solved part of this?